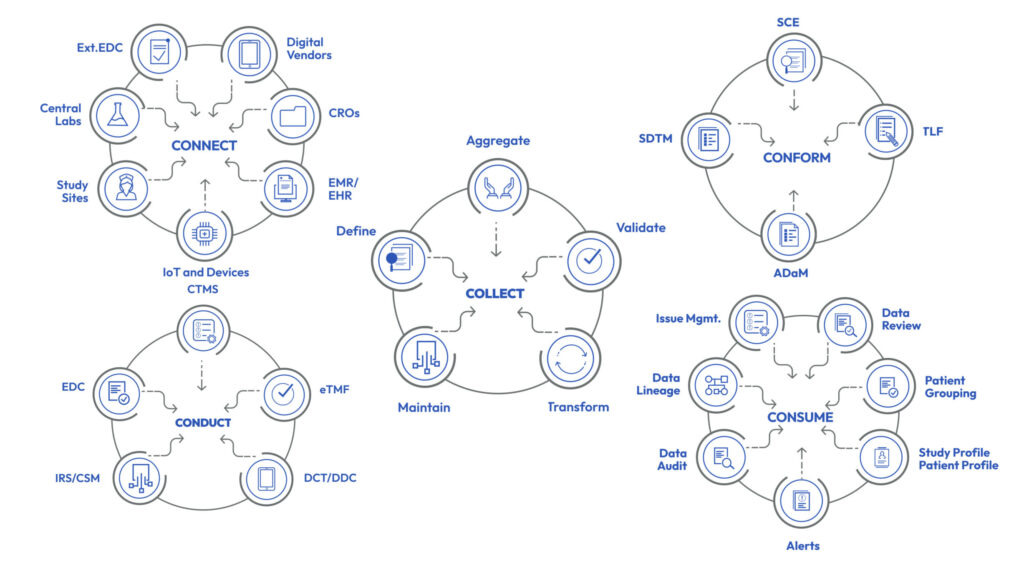

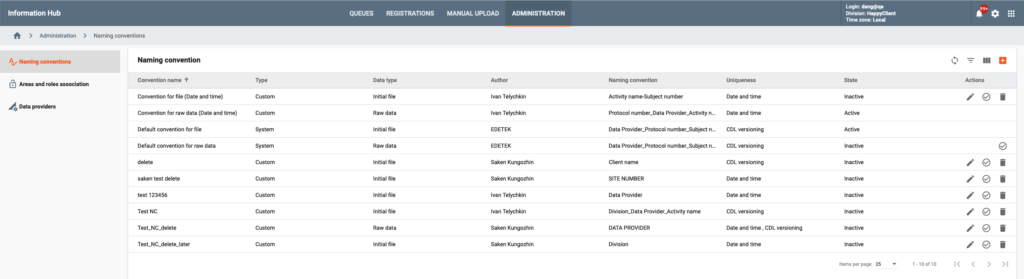

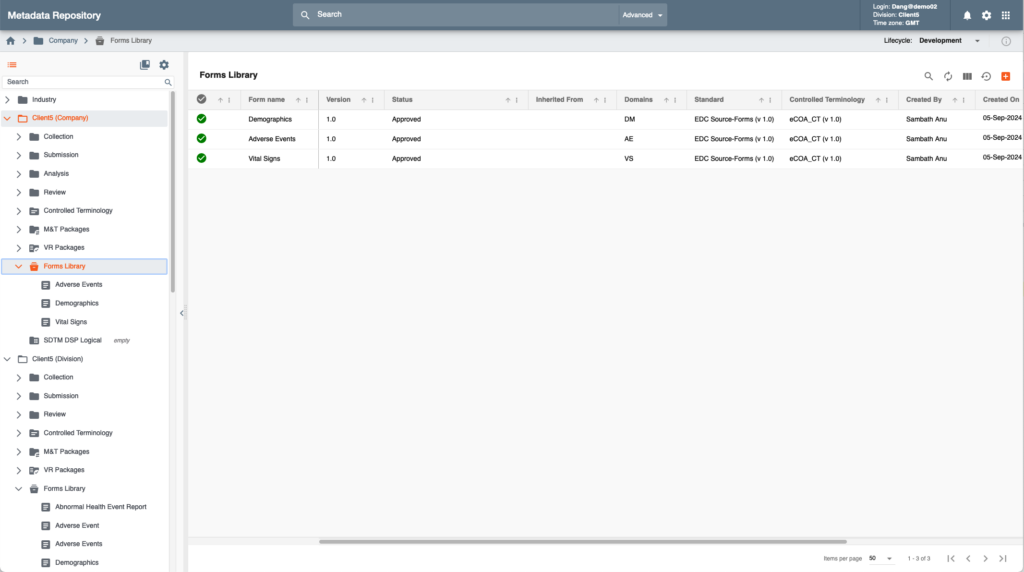

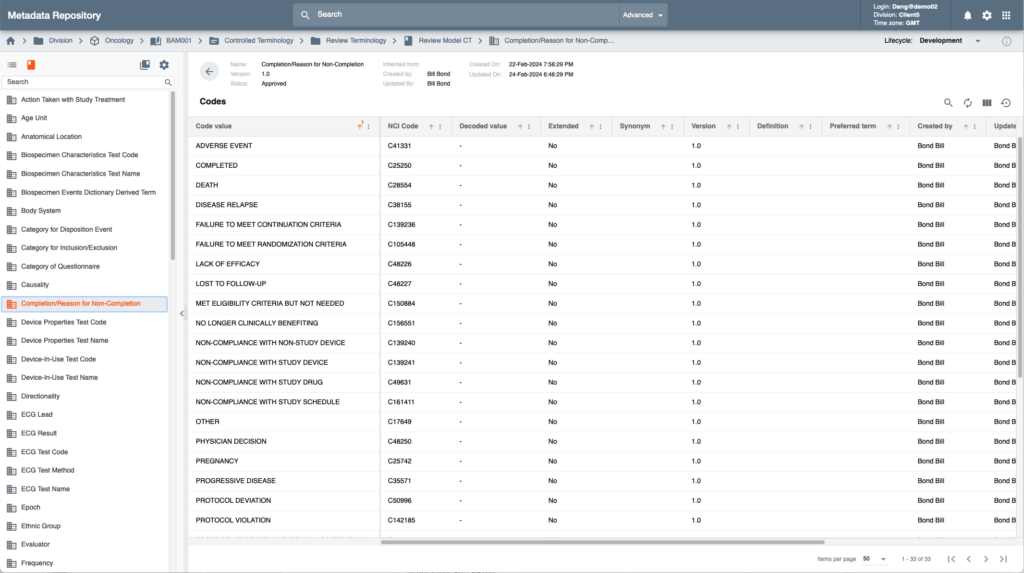

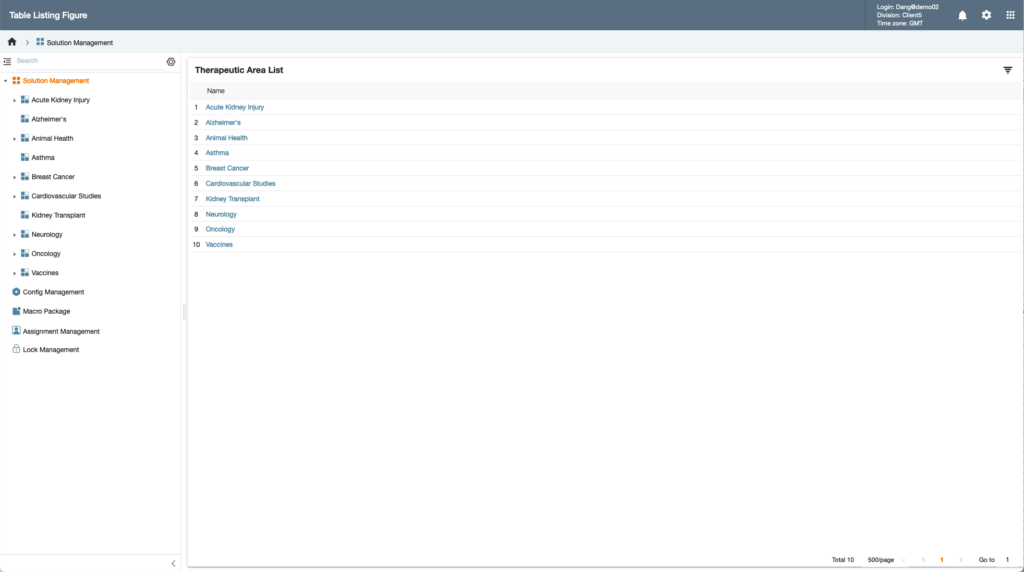

The Intelligent Metadata Hub provides comprehensive metadata stewardship and effective configuration of studies using Industry, Company or Therapeutic Area standards across collection, review, analysis and custom scientific and operational metadata.

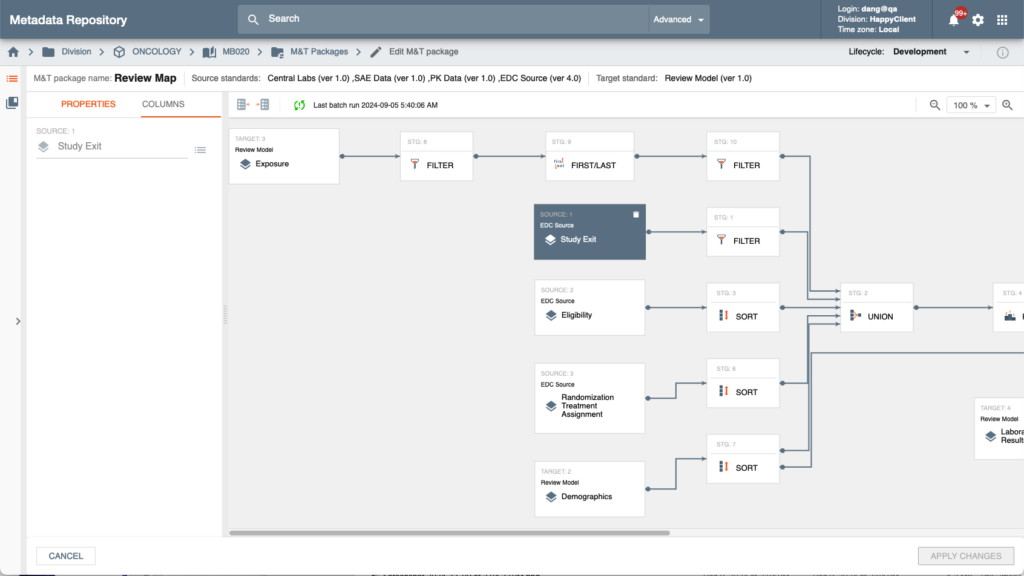

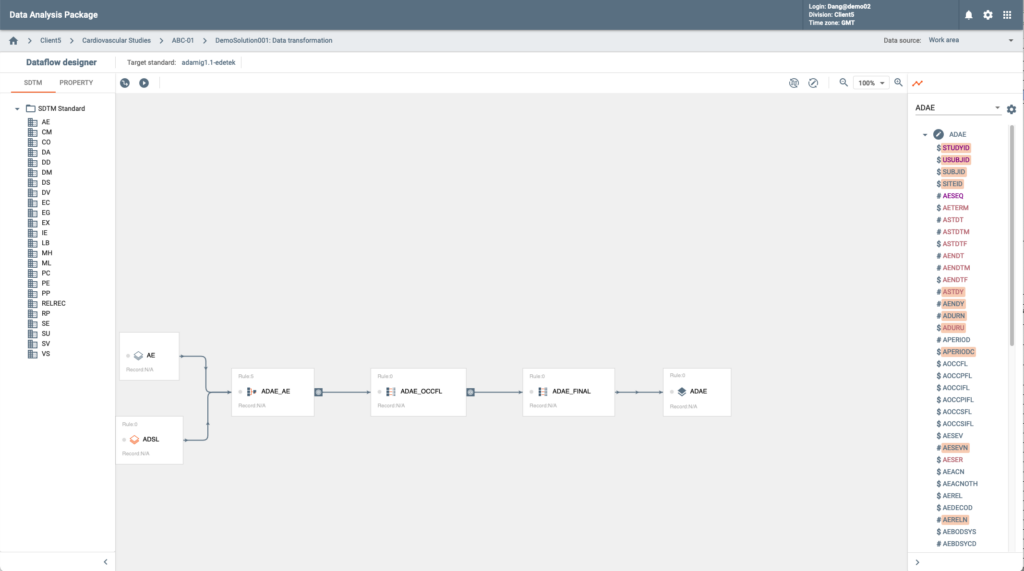

The system offers a state-of-the-art graphical designer for building and managing data transformation and validation rules without any software coding by end users. These features significantly reduce the time and cost of study initiation tasks while powering the Platform’s Clinical Data Pipeline and populating the Unified Data Repository.

Metadata stewards have access to a comprehensive set of tools to manage metadata and business rules, including versioning, life cycle management, approval, automatic notifications and impact analysis.

The Intelligent Metadata Hub also enables centralized, cross-ecosystem metadata management by offering tools for eCRF, ePRO and eCOA designs that can be automatically populated for various data collection systems inside and outside of EDETEK’s R&D Cloud.

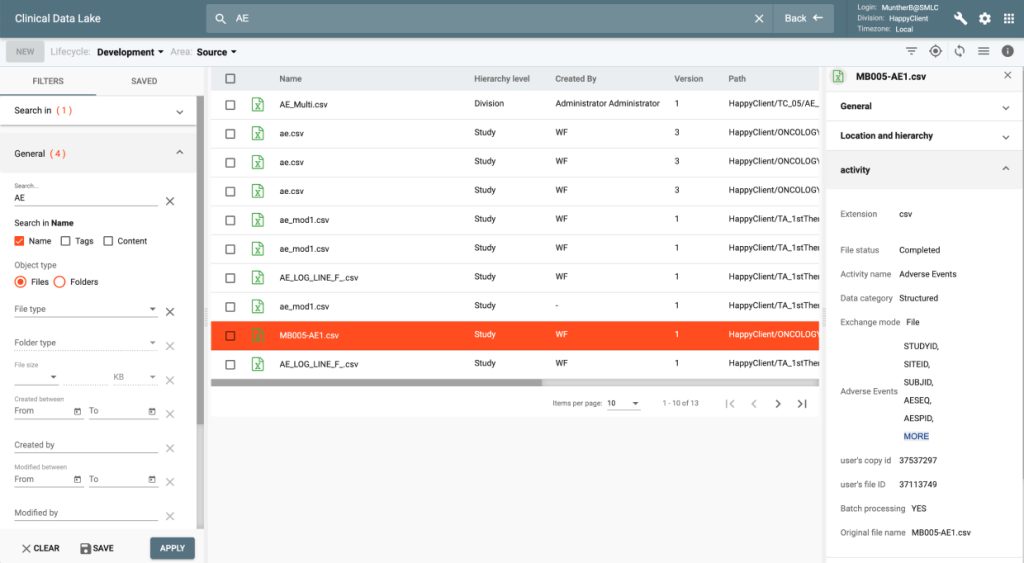

All data files and documents ingested into CONFORM™, as well as those produced within the Platform, are stored in CDL.

CDL offers cataloged storage and tools for all study data ingestion, standardization, cleansing, monitoring, review, analysis and AI/ML modeling.

CDL provides storage, manipulation and viewing of a variety of medical file types, including advanced medical imaging, sound and video files.

The product enforces GxP rules for clinical data handling with strict access management, version control, workflow approvals and event logging of all actions in the system.

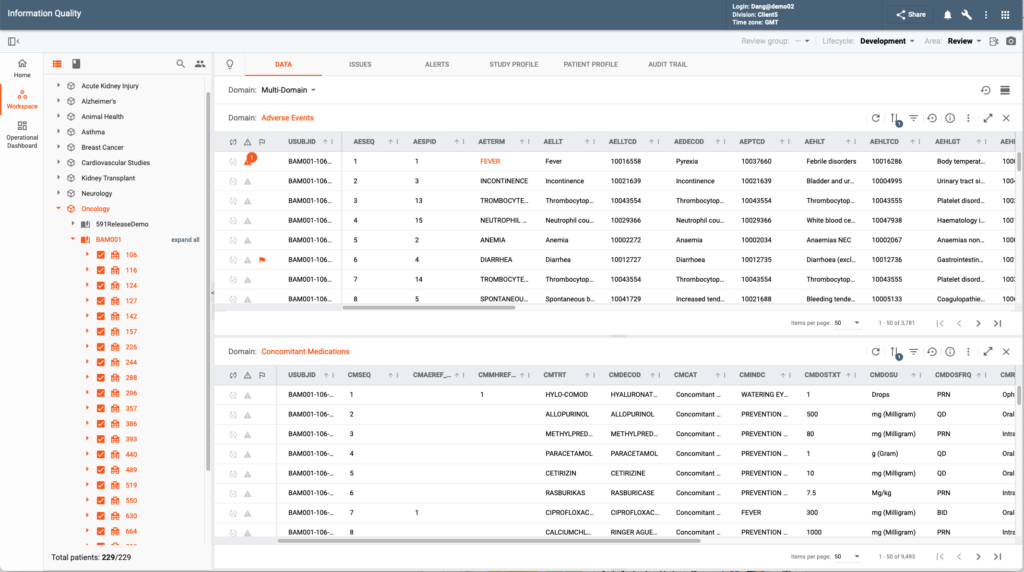

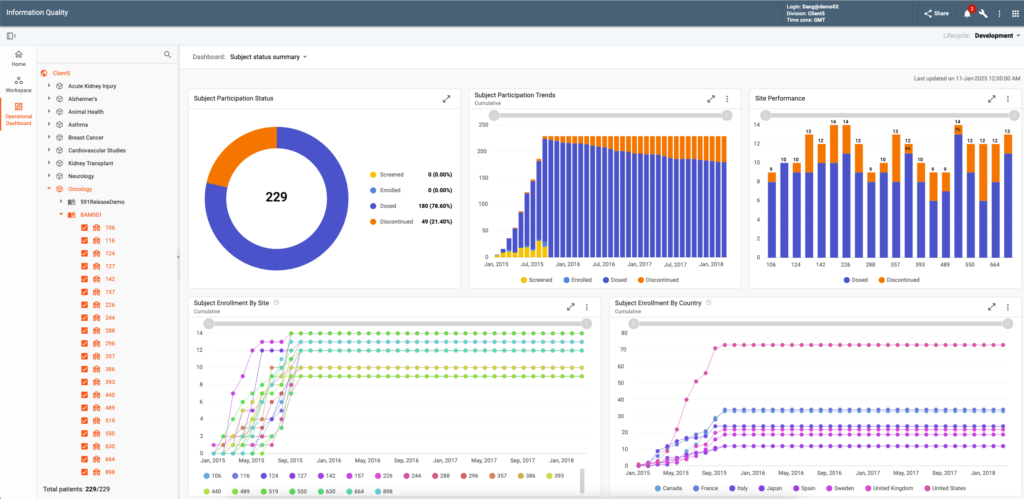

The Clinical Study Quality Management, Review and Analytics (IQ) redefines data management, review and quality management in life sciences with an integrated platform that supports continuous data ingestion and aggregation, data cleaning with advanced quality checks, role-based data review utilizing intuitive visualizations and collaborative workflows, and monitoring of clinical and operational data.

The system ensures superior data quality, enables enhanced decision-making, offers visibility into patient safety and efficacy signals, and evaluates study and site performance in a regulatory-compliant environment. The platform centralizes issue management for study, site, and patient-level challenges, automatically recording and managing issues from internal and external sources, such as EDC queries and protocol deviations.

Flexible workflows enable collaborative, role-driven resolutions, ensuring streamlined oversight and timely corrective actions. Operational dashboards provide high-level insights, while study and patient dashboards support real-time monitoring of compliance, efficacy, and safety. Advanced filtering and blinded data presentations enhance secure and efficient data reviews, boosting productivity and maintaining data integrity.

The Unified Data Repository (UDR) is a highly adaptable and configurable clinical data repository, structurally designed to support effective and efficient cleaning, review, monitoring and analysis of data regardless of study complexity, size, in-flight study amendments and frequency of data refreshes.

UDR is deeply integrated with the Platform’s Intelligent Metadata Hub, providing one or many data models for individual studies depending on the corporate business process and on the business applications and functions the study uses in the Platform.

Metadata integration allows enterprise or TA-based standards to be reused and, when necessary, the data model to be flexibly customized to align with unique business processes and evolving study designs without requiring any software coding.

Supporting the open ecosystem of EDETEK’s R&D Cloud, UDR fosters smooth interoperability, creating a cohesive and efficient data ecosystem. Its versatile database design accommodates a wide array of standards and structures, ensuring usability across various domains while enabling automated and continuous study data refreshes.

UDR offers data lineage across data models, delivering comprehensive traceability and efficient data processing from ingestion to analysis. As a centralized repository, it ensures reliable data storage and facilitates seamless retrieval for analytics, reporting, and downstream applications utilizing the latest advancements in cloud and database technologies.

The Clinical Data Management (CDM) module streamlines data cleaning, curation, and quality assurance across all trial phases and for trials of any complexity. Operational dashboards, summaries, configurable data review plans with defined critical data points, and patient tracking enhance oversight, while advanced discrepancy detection and resolution automation expedite data cleaning processes.

CDM users review data via standard and configurable custom data listings. These listings include numerous assisting functions, such as filtering data and flagging and commenting on individual data records. Users can group the data by site, by subject(s), or by dynamic groups of subjects configured with business or medical criteria. CDM users also have access to various study and subject-based data visualizations to identify potentially incorrect entries that can be turned into site queries.

A major productivity boost is achieved with the CONFORM™ Flexible Validation Rules, which users define with zero coding or by simply reusing existing library rules. These rules enable complex validation across data providers and across domains, ensuring data accuracy and compliance. The rules are automatically executed during data refresh.
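As a rough illustration of what a cross-domain validation rule checks, the Python sketch below flags adverse events that start before the subject's informed consent date. The record layout, the sample data, and the function `check_ae_after_consent` are assumptions made for this example. The rules described above are defined without coding, so this is not the product's rule format.

```python
from datetime import date

# Hypothetical demographics (DM) and adverse event (AE) records.
dm = [
    {"USUBJID": "S-001", "RFICDTC": date(2024, 1, 10)},
    {"USUBJID": "S-002", "RFICDTC": date(2024, 2, 1)},
]
ae = [
    {"USUBJID": "S-001", "AETERM": "Headache", "AESTDTC": date(2024, 1, 15)},
    {"USUBJID": "S-002", "AETERM": "Nausea", "AESTDTC": date(2024, 1, 20)},
]


def check_ae_after_consent(dm_rows, ae_rows):
    """Return AE records whose start date precedes informed consent."""
    consent = {row["USUBJID"]: row["RFICDTC"] for row in dm_rows}
    findings = []
    for row in ae_rows:
        consent_date = consent.get(row["USUBJID"])
        # Subjects without a consent date are skipped here; a separate
        # rule would be expected to report that gap.
        if consent_date is not None and row["AESTDTC"] < consent_date:
            findings.append(row)
    return findings


findings = check_ae_after_consent(dm, ae)
for f in findings:
    print(f["USUBJID"], f["AETERM"], "starts before informed consent")
```

In this sample only subject S-002 is flagged, because the adverse event is dated before the consent date in the other domain.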

Issues that are turned into site queries can be automatically sent to the source EDC systems (this functionality is supported with several EDC vendors including EDETEK’s own EDC).

Robust audit trails and data lineage tracking (across data models, such as Source/Raw to Review) provide transparency and traceability throughout the data lifecycle. Features such as data exports, snapshots, and data cut-off (DCO) support efficient milestone-based cleaning and analyses, empowering timely, reliable decision-making.

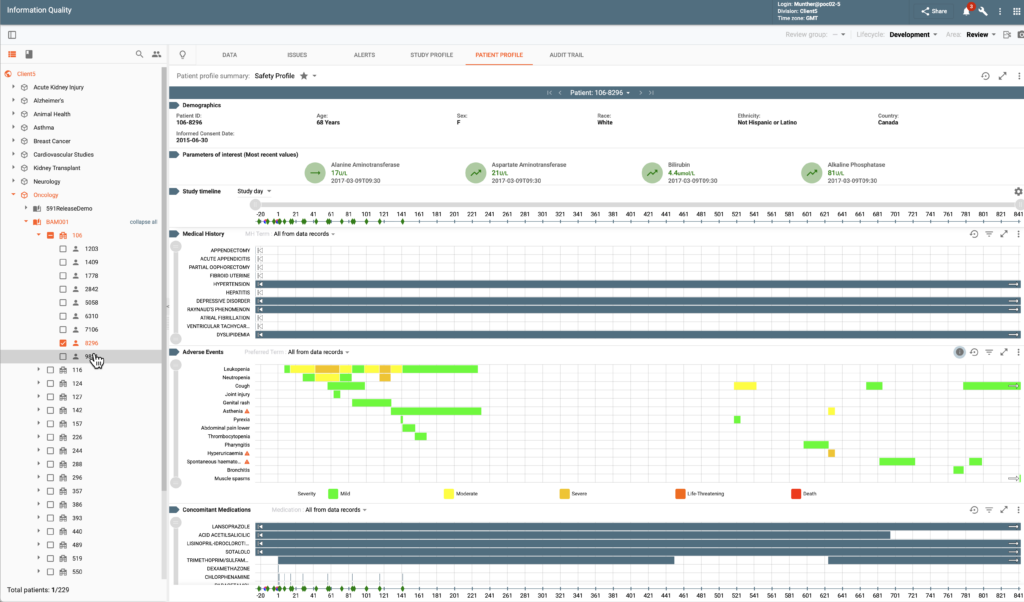

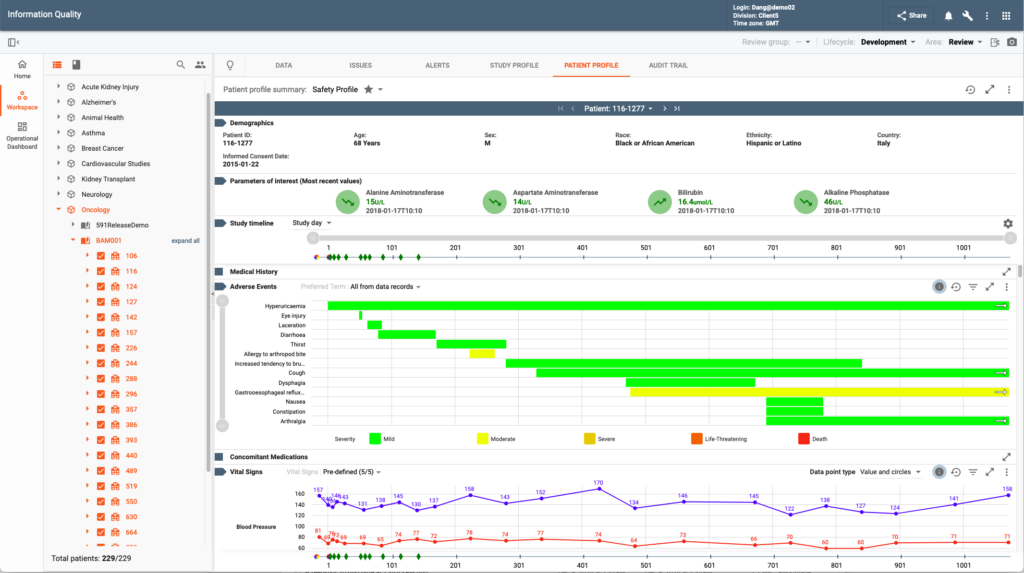

Data Visualization & Analytics (DV&A) empowers all data reviewers and statisticians with real-time analysis and intuitive graphical tools. Functionality is provided via Study Profiles and Patient Profile Summaries.

Study Profiles aggregate data trends, automatically flagging deviations from expected thresholds. A diverse set of visualization options is available, facilitating protocol-specific data review and insights. Examples of available charts include line charts, waterfall plots, swimmer plots, forest plots, spider plots, Kaplan-Meier curves, CONSORT charts, scatter plots, and box plots. Charts can be custom configured for the needs of particular indications and studies.

Patient Profile Summaries offer detailed longitudinal views of a subject’s data. A number of pre-built profiles are included in the system. Users can adjust many configurable parameters to achieve custom profiles specific to their business needs.

Numerous additional features assist users in their data review tasks: dynamic filtering and visual markers simplify pattern recognition; color coding of data that is new or changed since the last review improves process efficiency; the ability to publish a data issue for questionable data points saves time on navigation and communication with CDM; and charts can be exported as a presentation in PDF.

CHAT.IQ, a generative AI-powered assistant, revolutionizes clinical data interaction through dynamic, conversational exploration and analysis. Users can pose complex questions like, “What are the trends in adverse events for treatment X?” and receive instant, interactive visualized insights. This real-time analysis fosters a deeper understanding of clinical data, accelerating data-driven decision-making.

Seamlessly integrated with the CONFORM™ ecosystem, CHAT.IQ enhances collaboration and simplifies the interpretation of complex datasets, driving innovation and efficiency in clinical research. CHAT.IQ features an asynchronous predictive model that proactively alerts users to unseen patterns and critical insights.

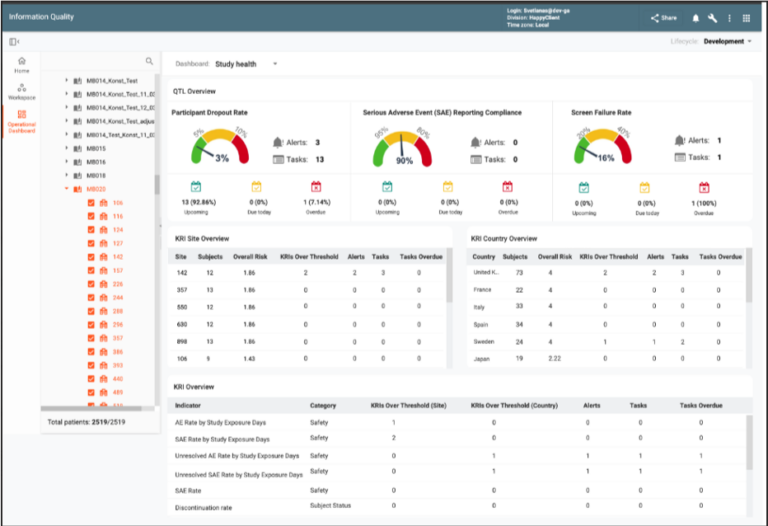

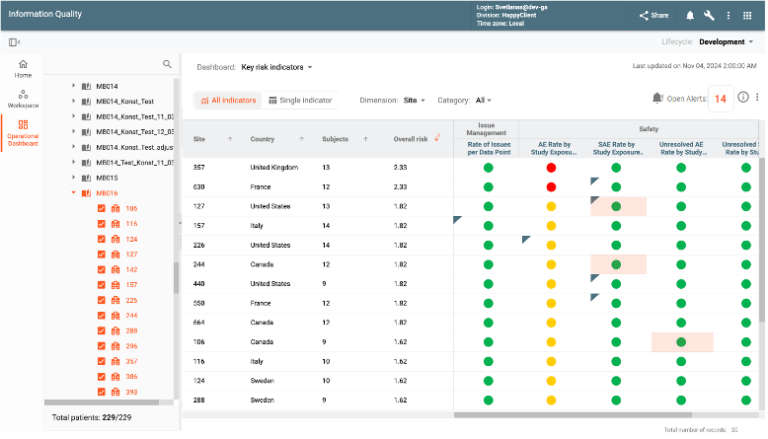

Risk-Based Monitoring (RBM) enhances study oversight through Key Risk Indicators (KRIs) and Quality Tolerance Limits (QTLs). Automated notifications for high-risk indicators prompt quick responses to identified issues. The module provides insights into study, country, and site-specific trends, enabling corrective actions to mitigate potential risks. Integrated dashboards combine safety, efficacy, and operational data for actionable insights, ensuring proactive risk management and regulatory compliance.

CONFORM™ provides a library of KRIs and QTLs that can be quickly utilized by the sponsors and individual studies. The library is frequently populated with new entries.

End users can construct new KRIs from an array of metrics that CONFORM™ populates automatically. Additional metrics and KRIs can be constructed through the available EDETEK services.
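To make the idea of building a KRI from site-level metrics concrete, here is a minimal Python sketch that derives an adverse-events-per-subject indicator and classifies each site against two thresholds. The `SiteMetrics` structure, the function `ae_rate_kri`, the threshold values, and the sample numbers are all hypothetical and are not taken from the CONFORM™ KRI library.

```python
from dataclasses import dataclass


@dataclass
class SiteMetrics:
    site_id: str
    enrolled_subjects: int
    adverse_events: int


def ae_rate_kri(metrics, low=0.5, high=3.0):
    """Classify each site by adverse events per enrolled subject.

    The low and high thresholds are illustrative assumptions only.
    """
    results = {}
    for m in metrics:
        if m.enrolled_subjects == 0:
            results[m.site_id] = "no data"
            continue
        rate = m.adverse_events / m.enrolled_subjects
        if rate < low:
            results[m.site_id] = "possible under-reporting"
        elif rate > high:
            results[m.site_id] = "high rate"
        else:
            results[m.site_id] = "within limits"
    return results


sites = [
    SiteMetrics("101", 20, 4),
    SiteMetrics("102", 10, 15),
    SiteMetrics("103", 5, 20),
]
kri = ae_rate_kri(sites)
for site_id, status in kri.items():
    print(site_id, status)
```

A site flagged at either extreme would typically be a candidate for the automated notifications and corrective actions mentioned earlier.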

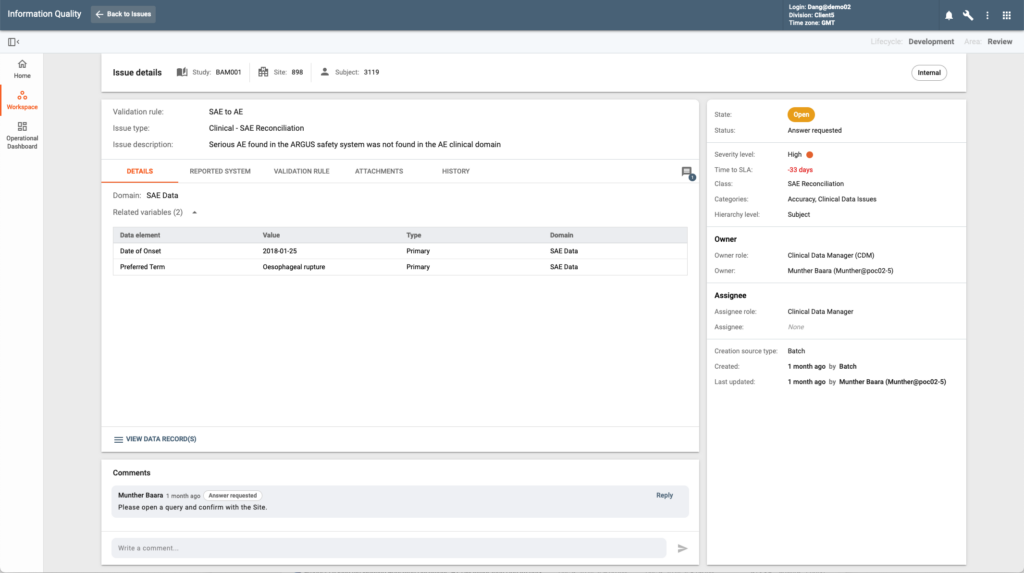

The CONFORM™ Centralized Issue Management System (CIM), with its integrated issue management functionality and flexible, user-configurable design, offers distinct business advantages that set it apart from other solutions.

This product collects and tracks all issues that occur during study conduct. Issues can be generated by the system or created manually. In line with the open ecosystem of EDETEK’s R&D Cloud, issues can be created by CONFORM™ applications and users or by external systems via the provided integrations. A single system for study issues ensures a comprehensive view of study quality while providing one application where all clinical business functions collaborate on resolutions.

Multiple user-configurable issue types with specific data payloads are supported by the system. This is an important and unique design feature as it enables aggregation of issues produced by different CONFORM™ and external applications regardless of the content. Users can also configure issue type-specific SLAs and resolution workflows.

This study-quality-centric application ultimately enables pharma sponsors and CROs to consolidate issue and deviation management across all their tools (EDC, CTMS, eTMF, etc.) in CONFORM™, improving efficiency in compliance and collaboration.

Medical and statistical data monitoring is realized via an application called Alerts. Alerts analyze the data in real time as soon as the data refresh is executed in CONFORM™ to address potential patient safety concerns or study conduct deviations at the sites. Real-time monitoring fosters transparency, ensuring rapid issue resolution and maintaining data integrity throughout the duration of the study.

The system provides a user-friendly alert configuration builder that requires little technical knowledge and no software coding, allowing clinicians and scientists to create executable alerts. Clinical monitoring alerts highlight significant medical observations (e.g., dose-limiting toxicity), and the system notifies clinicians and/or scientists immediately upon alert creation.

Statistical alerts identify more complex anomalies in either clinical or operational data (e.g., fraud detection) that require the use of coded mathematical algorithms. These statistical programs are stored within CONFORM™’s SCE and linked to Data Monitoring.

Both Clinical and Statistical alerts are actionable and tracked to closure. For example, an alert can be easily converted to a site query.

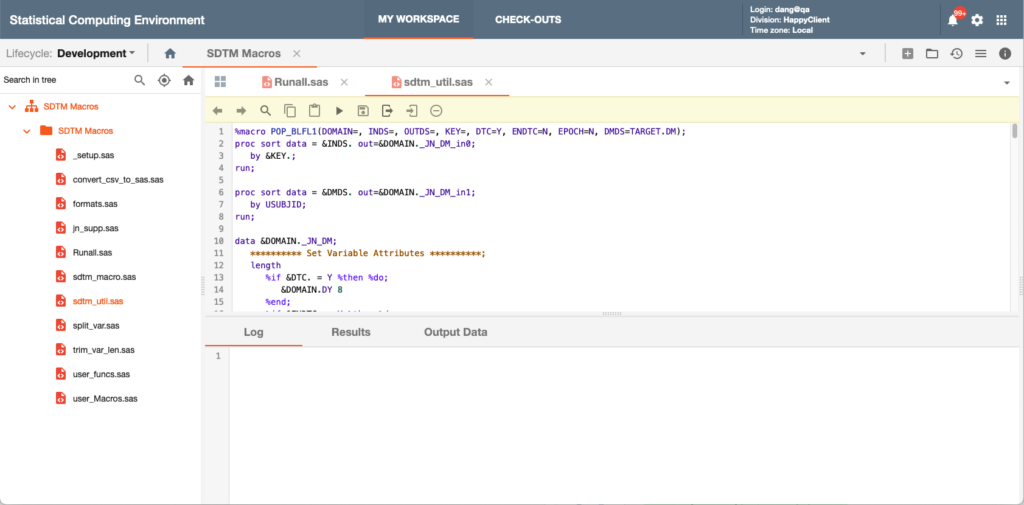

This product addresses many challenges faced by clinical programmers by providing a modern Web-based user interface, support for multiple programming languages (SAS, R, Python), version control, reproducibility, global access, cloud-native scalable computing power, deep integration with cloud-based storage, security (projects and data), study metadata-based attributes, and the ability to view statistical output, data and documents in the same application.

SCE’s interface allows users to create new programs with full version control, inherit code from the Global Macro Library, use static or live data feeds (via the Data Ingestion Gateway) with automatic report refresh, promote code between three lifecycle stages (Development, Test, Production) and schedule jobs.

The system also offers support for blinded data and cross-study statistical programming.

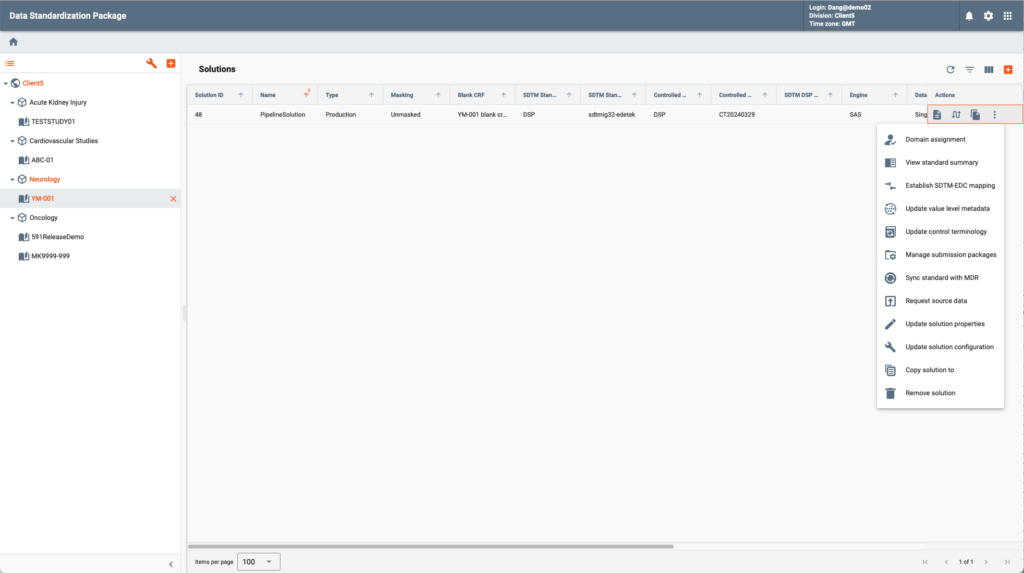

The product is a Web-based application designed for SDTM data and documents production. Users can manage and generate all SDTM deliverables in one system and export them in a submission ready package, which includes: SDTM annotated CRFs, SDTM datasets in sas7bdat and xpt formats, SDTM SAS scripts in .SAS format (executable), Programming specifications, Define.xml, and cSDRG.

This application provides a visual SDTM data flow designer and an automatic SDTM programming code generator. The code generator allows users to choose between the SAS and R programming languages. Custom user-defined macros can be inserted and reused.

The product supports all published versions of the CDISC SDTM IGs, and optionally utilizes AI/ML algorithms to assist with SDTM annotations and mappings.

TLF is a standards-based statistical analysis application that improves the efficiency and accuracy of submission-quality TLF design and publishing.

TLF is a design tool that supports customizable layouts, flexible formatting, various types of graphical outputs, etc.

Like other CONFORM™ statistical applications, TLF is integrated with the Platform’s Intelligent Metadata Hub to support enterprise features such as governance and versioning.

This application ensures that datasets meet CDISC standards and regulatory requirements, reducing the risk of rejection by agencies like the FDA or PMDA. It identifies and resolves issues early in the submission process, ensuring clean, high-quality data for analysis and review.

The system incorporates and runs all approved CDISC and FDA validation rules for a particular IG, then identifies and presents issues to the users. User-defined custom rules can be added to the system.

The application can execute validation rules stand-alone against submitted datasets, or it can be part of the CONFORM™ Clinical Data Pipeline, where it can be configured to run automatically on every data refresh.

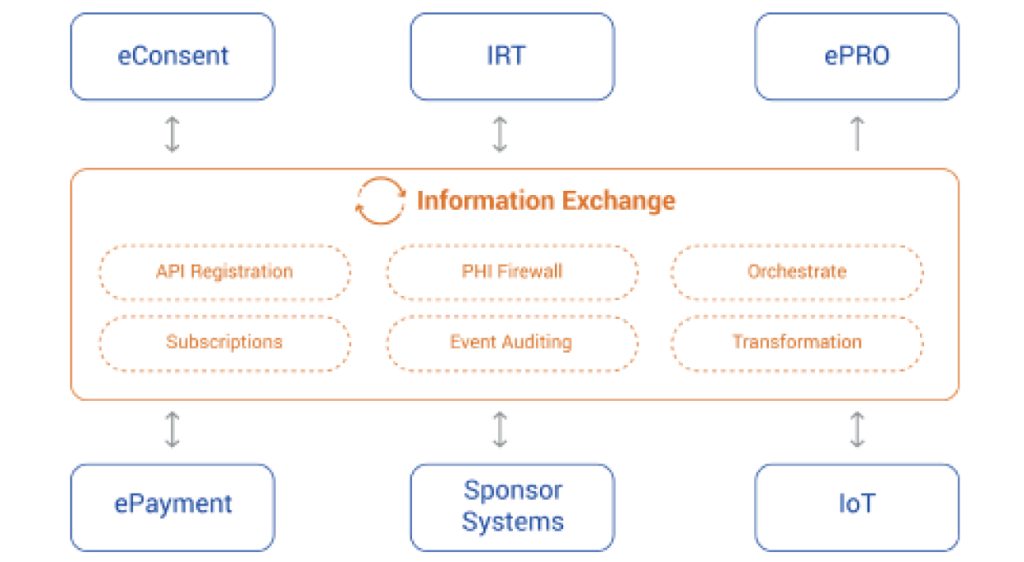

The Orchestration and Collaboration Manager (OCM) provides real-time data exchange and collaboration for CONFORM™ applications and users as well as external clinical systems involved in a clinical trial. OCM also enables an open event-driven architecture and real-time integrations for EDETEK’s R&D Cloud.

This product is a set of tools for the management and automatic distribution of clinical and technical events that occur during study conduct. It includes management of event metadata, configurable event subscriptions, notifications and workflow management. Key functions also include end-to-end process logging, auditing, compliance tracking, and real-time operational and scientific aggregation and analytics of study events.
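The subscription-and-distribution pattern described above can be pictured with a small publish/subscribe sketch in Python. The `EventBus` class, the event names, and the payload fields are hypothetical and chosen only for illustration; they do not represent the product's interfaces.

```python
from collections import defaultdict


class EventBus:
    """Tiny in-memory publish/subscribe hub with an event log."""

    def __init__(self):
        self._subscribers = defaultdict(list)
        self.log = []  # every published event is recorded for auditing

    def subscribe(self, event_type, handler):
        self._subscribers[event_type].append(handler)

    def publish(self, event_type, payload):
        self.log.append((event_type, payload))
        # Deliver the event to every handler subscribed to this type.
        for handler in self._subscribers[event_type]:
            handler(payload)


notifications = []
bus = EventBus()
bus.subscribe(
    "data_refresh_completed",
    lambda p: notifications.append(f"Study {p['study']} refreshed"),
)

bus.publish("data_refresh_completed", {"study": "ABC-123"})
bus.publish("site_activated", {"study": "ABC-123", "site": "101"})
print(notifications)
print(len(bus.log), "events logged")
```

Only the subscribed event type produces a notification, while both events are kept in the log, which mirrors the separation between distribution and end-to-end logging.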

The Clinical Data Pipeline (CDP) serves as the backbone for clinical data processing, dynamically handling incoming files and transforming them into the target model as defined by CONFORM™’s Intelligent Metadata Hub (IMH). The CDP verifies that the incoming data adheres to data provider specifications and flags any discrepancies. It also executes cross-data-provider validation rules, ensuring consistency and reliability.

CDP efficiently handles newly ingested files, automatically identifying data changes and new data issues while automatically closing any prior validation errors that are no longer applicable. In addition, it keeps a complete audit trail of all changes.
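One simple way to think about opening new issues and closing obsolete ones on each refresh is a set comparison between the issues that were open before the refresh and the findings of the latest run. The Python sketch below shows that idea only; the function `reconcile_issues`, the issue keys, and the sample values are assumptions and do not describe how CDP is implemented.

```python
def reconcile_issues(open_issues, current_findings):
    """Compare issue keys open before a refresh with the latest findings."""
    to_open = current_findings - open_issues     # newly detected issues
    to_close = open_issues - current_findings    # no longer reproduced
    still_open = open_issues & current_findings  # unchanged issues
    return to_open, to_close, still_open


# Hypothetical issue keys: (subject, rule identifier).
previous = {
    ("S-001", "AE_BEFORE_CONSENT"),
    ("S-002", "MISSING_VISIT_DATE"),
}
current = {
    ("S-002", "MISSING_VISIT_DATE"),
    ("S-003", "LAB_OUT_OF_RANGE"),
}

to_open, to_close, still_open = reconcile_issues(previous, current)
print("open:", to_open)
print("close:", to_close)
print("keep:", still_open)
```

Here the corrected record for S-001 leads to its issue being closed, the S-003 finding becomes a new issue, and the S-002 issue stays open.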

CDP is resilient in its processing. It handles patient data exceptions without failing to process other patients. Furthermore, business events are defined and triggered to notify stakeholders of critical execution issues, ensuring enhanced process oversight with timely responses.
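The resilience described above, where one patient's bad data does not stop the rest of the load, can be sketched as per-subject error isolation. In this Python example the function names, the record fields, and the unit conversion are hypothetical stand-ins for whatever transformations a real pipeline would run.

```python
def process_subjects(records_by_subject, transform):
    """Transform each subject's records, isolating failures per subject."""
    processed, failures = {}, {}
    for subject_id, records in records_by_subject.items():
        try:
            processed[subject_id] = [transform(r) for r in records]
        except Exception as exc:
            # Record the problem and continue with the next subject.
            failures[subject_id] = str(exc)
    return processed, failures


def to_kilograms(record):
    """Convert a weight in pounds (stored as text) to kilograms."""
    return {"weight_kg": float(record["weight_lb"]) * 0.45359237}


data = {
    "S-001": [{"weight_lb": "150"}],
    "S-002": [{"weight_lb": "n/a"}],  # malformed value
    "S-003": [{"weight_lb": "200"}],
}

processed, failures = process_subjects(data, to_kilograms)
print(sorted(processed))
print(failures)
```

The entries collected in `failures` are the kind of information that could feed the stakeholder notifications mentioned above.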

CDP optimizes the durability, performance, and stability of the process through the advanced and scalable cloud design, ensuring seamless operation for various business functions without disruption to end users or downstream integrations. The pipeline’s unique object model offers a flexible data structure that remains resilient to study amendments, enabling reliable loading, validation, and transformation of data.

The Single Sign On (SSO) solution is compatible with leading identity providers such as Okta, Azure AD/Entra and Google Workspace, as well as on-premises deployments from all major IdP vendors. Support for multiple SSO providers is available. This flexibility allows CONFORM™ sponsors and stakeholders to use their own sanctioned credentials, reducing complexity and eliminating barriers to system access.

Managing authentication shouldn’t slow down innovation or increase risks. CONFORM™ R&D Cloud SSO reduces friction, enabling organizations to streamline processes, increase security, and ensure uninterrupted access to critical systems. This technology allows collaborators to log in using their preferred systems while meeting the highest security standards.